The film is set in a future where human society is transformed by harsh biological realities and civilization has shrunk to a few scattered, encapsulated communities clinging to the memory of greatness.

Adam, our main character, was the starting point of our visual design process. He was designed to offer a glimpse into the world's complex backstory, revealing himself as a human prisoner whose consciousness has been trapped in a cheap mechanical body.

My computer can't even play back the video without a bit of stutter. It feels like a message will pop up any second telling me, "This isn't something I can even fathom playing back for you in a timely fashion." I can only imagine what a $600 graphics card could do with this technology.

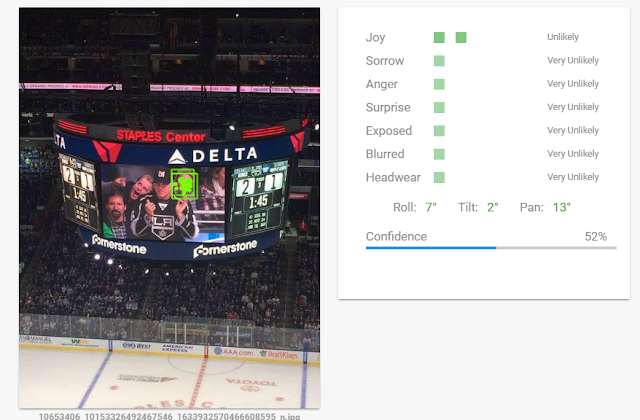

The amount of thought and depth that went into this short demo is staggering. What I find really interesting is the concept art and the reference sheets. Google Goggles and machine vision seem like great tools for building these otherworldly characters: take a picture of someone and the software will classify it, recognize color and text, identify brands and bar codes, and search for related images. We could adapt the Google Cloud Vision API or the Microsoft Cognitive Services Computer Vision API to generate these reference sheets automatically and give a real-time art director additional insight and ideas.
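As a rough illustration of the reference-sheet idea, here is a minimal Python sketch that builds a request body for the Google Cloud Vision `images:annotate` REST endpoint, mapping the capabilities above onto Cloud Vision feature types. It only assembles the JSON; authentication and the HTTP call are left out. The `FEATURES` mapping and `build_annotate_request` helper are hypothetical names, and bar codes are omitted on the assumption that Cloud Vision has no dedicated barcode feature type.

```python
import base64
import json

# Hypothetical mapping from the capabilities described above to Cloud Vision
# feature types. Bar codes are left out (assumed: no dedicated feature type).
FEATURES = {
    "classify": "LABEL_DETECTION",
    "color": "IMAGE_PROPERTIES",
    "text": "TEXT_DETECTION",
    "brands": "LOGO_DETECTION",
    "related_images": "WEB_DETECTION",
}


def build_annotate_request(image_bytes: bytes, max_results: int = 10) -> dict:
    """Assemble a JSON body for POST https://vision.googleapis.com/v1/images:annotate.

    Authentication (API key or OAuth token) and the HTTP call itself are omitted.
    """
    # Inline image bytes are sent as a base64-encoded string.
    content = base64.b64encode(image_bytes).decode("ascii")
    features = [{"type": t, "maxResults": max_results} for t in FEATURES.values()]
    return {"requests": [{"image": {"content": content}, "features": features}]}


# Placeholder bytes stand in for a real photo of a character reference.
body = build_annotate_request(b"abc", max_results=5)
print(json.dumps(body, indent=2))
```

The response carries `labelAnnotations`, `imagePropertiesAnnotation`, `textAnnotations`, `logoAnnotations`, and `webDetection` fields, which could then be laid out as a reference sheet.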

What if a tiny device sitting in the middle of your living room could model the room with 360-degree depth video, add ceilings and floors, render light sources, color match, visually classify the contents, and determine what would look just a little out of place while still matching the color, lighting scheme, and design aesthetic of the space?

The tough problem is no longer modeling a room in real time; I can do that with a Kinect and Skanect in about 5 minutes.

*Well, there are still a few kinks to work out...*

The tough problem is making this technology portable, non-intrusive, and insensitive to light sources like the Sun: using laser technology to capture accurate depth and distance across hundreds of yards of indoor or outdoor terrain, and finding a way of bringing the experience to the individual rather than bringing the individual to the experience.

What if you could take an experience like watching a hockey game, render it in real time in VR, add binaural audio, and bring along a few of your closest friends from around the world?

There's still some room for the APIs to grow, though. I'm pretty sure there's plenty more Joy, Sorrow, Anger, Surprise, and Headwear at this hockey game than was detected.
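Joy, Sorrow, Anger, Surprise, and Headwear correspond to the per-face likelihood fields in a Cloud Vision face detection response. One way to see where detection falls short is to tally how many faces each attribute is flagged on. This is a minimal sketch, assuming the `images:annotate` response has already been parsed into a dict; the `tally_faces` helper is a hypothetical name, the `sample` data is made up (not real results from the hockey game), and counting only `LIKELY` or `VERY_LIKELY` as a hit is an arbitrary threshold.

```python
from collections import Counter

# Display label -> likelihood field on each Cloud Vision face annotation.
ATTRIBUTES = {
    "Joy": "joyLikelihood",
    "Sorrow": "sorrowLikelihood",
    "Anger": "angerLikelihood",
    "Surprise": "surpriseLikelihood",
    "Headwear": "headwearLikelihood",
}
# Assumed threshold: only these likelihood values count as a detection.
POSITIVE = {"LIKELY", "VERY_LIKELY"}


def tally_faces(response: dict) -> Counter:
    """Count, per attribute, how many detected faces meet the threshold."""
    counts = Counter()
    for result in response.get("responses", []):
        for face in result.get("faceAnnotations", []):
            for label, field in ATTRIBUTES.items():
                if face.get(field) in POSITIVE:
                    counts[label] += 1
    return counts


# Made-up response with two faces, for illustration only.
sample = {"responses": [{"faceAnnotations": [
    {"joyLikelihood": "VERY_LIKELY", "angerLikelihood": "UNLIKELY",
     "headwearLikelihood": "LIKELY"},
    {"joyLikelihood": "POSSIBLE", "surpriseLikelihood": "LIKELY"},
]}]}
print(dict(tally_faces(sample)))  # {'Joy': 1, 'Headwear': 1, 'Surprise': 1}
```

Lowering the threshold to include `POSSIBLE` would trade precision for recall, which may be closer to what a crowd shot like this one needs.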

https://blogs.unity3d.com/2016/07/07/adam-production-design-for-the-real-time-short-film/

https://unity3d.com/pages/adam

The demo executable and assets are available here:

https://blogs.unity3d.com/2016/11/01/adam-demo-executable-and-assets-released/