I was blown away.

After downloading a few apps for my Sony Xperia phone and almost melting the phone itself, I was amazed at how the seemingly simple visual trick of splitting the screen into one image per eye immersed you in an alternate reality. Everyone I showed it to was similarly impressed. I tried it with a new set of Bluetooth headphones and found myself standing on stage at a binaural, immersive audio concert, watching Paul McCartney play "Live and Let Die" on the piano a couple of feet from me.

So why isn't everyone talking about it and wearing these things outside?

Well, Cardboard VR looks pretty silly. It's, um, cardboard. My kids liked it, yet they don't talk or ask about it the way they do about the iPad, the Xbox, or even Pokémon. It heats up my phone and chews up valuable storage space. It's blurry. You have to hold it to your face. There isn't a lot of easily discoverable content. You have to start an app on your device before you put it on. There is no keyboard or mouse. And talking to yourself (since you can't really see a device) isn't like talking to Alexa, Siri, OK Google, or Xbox.

Perhaps my kids would use it more if we had a more professional headset, or a "kid-friendly" one that didn't require a phone. I don't think so, though. In any case, it's probably worse than an iPad in terms of its potential effects on children's health and development, so I don't think it would be a good idea to let my kids play with it anyway.

"With appropriate programming such a display could literally be the Wonderland into which Alice walked." -- Ivan Sutherland

Running a dual split-screen display on a mobile device will burn through your battery like there's no tomorrow. With up to 90 fps needed for a good VR experience, it's no wonder you need a $1,000 gaming rig to get the best experience out of VR devices like the Oculus Rift and SteamVR headsets.
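To put the 90 fps figure in perspective, here is a rough back-of-the-envelope sketch. It assumes a Cardboard-style side-by-side split, where a landscape phone screen is divided into equal left-eye and right-eye halves, and that both eyes are drawn one after the other within a single display refresh. The function names are hypothetical, and real VR SDKs also perform lens-distortion correction and other work that eats into the same time budget.

```python
# Hypothetical helpers illustrating side-by-side stereo rendering on a phone.
# Assumption: a landscape screen split evenly into left-eye and right-eye halves,
# with both eyes rendered sequentially within one display refresh.


def eye_viewports(width_px: int, height_px: int) -> dict[str, tuple[int, int, int, int]]:
    """Return (x, y, w, h) viewports for each eye in a side-by-side split."""
    half = width_px // 2
    return {
        "left": (0, 0, half, height_px),
        # The right eye takes any leftover pixel when the width is odd.
        "right": (half, 0, width_px - half, height_px),
    }


def frame_budget_ms(fps: float) -> float:
    """Time available to render one complete stereo frame (both eyes), in ms."""
    return 1000.0 / fps


def per_eye_budget_ms(fps: float) -> float:
    """Per-eye budget, assuming the two views are rendered back to back."""
    return frame_budget_ms(fps) / 2


if __name__ == "__main__":
    # Example: a 1920x1080 phone screen held in landscape.
    print(eye_viewports(1920, 1080))
    for fps in (60, 90):
        print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, "
              f"{per_eye_budget_ms(fps):.1f} ms per eye")
```

At 90 fps, the whole stereo frame has to be finished in about 11.1 ms, or roughly 5.6 ms per eye if the views are rendered back to back, which goes some way toward explaining the hot phones and drained batteries.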

Searching GitHub for VR returns 17,000 repos. GoogleVR is the third best match. Facebook 360 is up there too. WebVR and WebGL are going crazy. There's the Jahshaka VR Content Creation Suite. 360 video is the Next Big Thing™.

So why is Virtual Reality stuck in the Trough of Disillusionment?

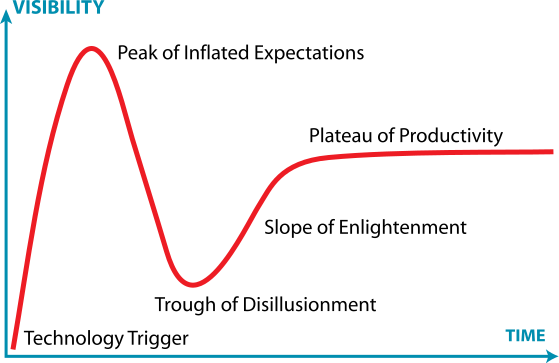

The Gartner Hype Cycle shows how technologies move through phases over time. At the Peak of Inflated Expectations, technologies and startups are "changing the world," and the press is clamoring for articles and content about them. Not everyone has the tech, but everyone wants it, and they don't really know why. There are successes and many more failures. Early adopters make or break the product or technology through their loyalty, interest, and evangelism.

Then comes a quick dip into the Trough of Disillusionment, where interest wanes as promises go undelivered. Investors didn't get the quick return they were looking for. Maintenance, support, and legal issues start creeping up. Everyone wants a piece of the pie, and the pie is getting a bit hard and crusty.

In the news recently, ZeniMax's $6 billion case against Facebook and Oculus ended with a $500 million judgment for ZeniMax. Another case was announced a couple of days ago. The pigs are feeding at the trough; I don't mean to compare anyone to pigs, but the saying seems to fit.

I'm a big fan of the two Johns (Carmack and Romero) and their impact on the gaming world with id Software. They spawned a billion-dollar industry starting from the idea that Super Mario Bros. 3 should be doable on the PC, outside a protected cartridge on the Nintendo Entertainment System. By creating Dangerous Dave in Copyright Infringement, John Carmack brought the idea to reality while mocking the concept of copyright itself. Shareware was in business.

Carmack's .plan files are an interesting historical trip through the mind of a game developer and 3D pioneer.

"Well, I have learned enough about it. I'm not going to finish the port. I have better things to do with my time." - John Carmack

His OpenGL position in January 1996 tells a story about the state of the art in 3D and the heated battle over competing 3D standards among the hardware vendors, software vendors, and developers of the time.

John Carmack, May 14, 1997 .plan file.

"I am still going to press the OpenGL issue, which is going to be crucial for future generations of games." - John Carmack

In 1996, 3dfx Voodoo cards were awesome, and I still have one in the tech graveyard in my basement shop. On the PC, they surpassed console and arcade hardware. Mine was unstable as hell, overheated my CPU, was incompatible with some games, and crashed my PC all the time. The bang for the buck and the wow factor made up for all of that.

Matrox, a company based in Quebec, had its high-end, prestige Millennium cards and released the Mystique to compete with the Voodoo's price point. Nicknamed the "Matrox Mystake," it didn't hit the mark. Nvidia and ATI (a company from Markham, Ontario) took over the market, and Nvidia eventually bought 3dfx's assets. Matrox is still around. Back then, the video card industry had just started its exponential rise to meet the demands of gaming and video software. Technology was diverging, converging, and commoditizing. The climb to the Peak of Inflated Expectations was underway.

"Many things that are a single line of GL code require half a page of D3D code to allocate a structure, set a size, fill something in, call a COM routine, then extract the result." - John Carmack

In 2011, Carmack suggested that Direct3D had surpassed OpenGL, though he still wouldn't use it.

In 2015, Microsoft brought DirectX 12 to Windows 10.

Lead developer Max McMullen stated that the main goal of Direct3D 12 is to achieve "console-level efficiency on phone, tablet and PC." Vulkan is a "closer-to-metal" API for hardware-accelerated graphics that operates at a lower level than OpenGL. At the time, Valve suggested it made no sense to use Direct3D 12 and that developers should stick with Vulkan, even though Vulkan hadn't been released yet (version 1.0 arrived in February 2016). That left Direct3D 12 as really the only low-level option on Windows ready for commercial use.

Also in 2015, Microsoft announced GPU capabilities in Azure.

2016. OpenGL vs. Direct3D: Who's The Winner of Graphics API

2017. OpenCL, OpenGL, OpenVX, Vulkan, WebGL, DirectX 12. However, gamers are no longer the only consumers of leading-edge graphics technology. AI, deep learning, and GPU compute are now key use cases for the technology. Clusters of machines running high-end GPUs don't display graphics at all. Display drivers have become virtual compute drivers. Microsoft has released the Azure N-Series NVIDIA GPU virtual machines.

So, back to VR. The key mainstream platform for graphics and VR in 2017 is mobile, and the dominant mobile platform is Android. Android runs OpenGL (specifically, OpenGL ES). Macs run OpenGL. Does that mean OpenGL wins?

As Facebook says, "It's complicated." Valve developed a wrapper to translate Direct3D to OpenGL. Unity can target both OpenGL and DirectX. WebGL and WebVR are slowly becoming platform-agnostic mainstream technologies. DirectX is still Windows, and OpenGL is still everything else.

The Slope of Enlightenment will come to VR when software, or virtual hosted hardware, replaces local hardware: when DirectX supports accelerated 3D over a network, similar to VirtualGL or Desktop Cloud Visualization, and when immersive virtual reality only requires you to look at an object and have its reality projected onto it, rather than putting on a device that projects it to you.

Push VR will make VR, and more realistically AR, a commercial success and commodity "necessity".