The technology is now addictive and magical; however, there is still a lot of friction: limited movement, lack of perspective, and latency.

And putting on a headset is clunky. Space limitations limit movement. Walking on a directional treadmill is odd and not practical. The brain and eyes are too fast to be fooled for long periods of time.

The surrounding technology, and the disruption brought by LLMs and diffusion models as they are applied to VR, will change this.

Things will change more as people realize that turning randomness and models into procedural thoughts and processes improves results. Generated 3D objects and worlds can instantly replace real ones virtually. These objects can also be printed, to replace real ones with copies that are "good enough" to be real. Projection mapping and pico-sized screens can further the feeling of different realities and perspectives against real-world physical objects.

According to Steven LaValle, randomness in models is something we may not need at all if we can precalculate things mathematically.

Steven M. LaValle is a professor at the University of Illinois Grainger College of Engineering and former Chief Scientist at Oculus. I found his books while reading about the creators of Doom (Carmack, Romero) and started to watch his lectures.

By adjusting the environment around an object, you can control its randomness.

In this case, the width of the walls, volume of the spaces, and angle and height of the arrowed paths control the statistical distribution of moving objects in a space.

Although we cannot control randomness, we can direct it.
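A minimal sketch of this idea, assuming a toy model that is not taken from LaValle's lectures: bodies wander randomly between two rooms joined by a funnel-shaped gate. The geometry is reduced to two hypothetical parameters, `p_forward` and `p_back`, the per-step chances that a body slips through the gate in each direction. No individual body is steered, yet the shape of the gate decides where most of them end up.

```python
import random


def simulate_gate(n_bodies=1000, steps=200, p_forward=0.30, p_back=0.05, seed=0):
    """Return the fraction of bodies in the right-hand room after `steps` ticks.

    p_forward and p_back are hypothetical stand-ins for the gate geometry:
    a wide funnel mouth on the left gives a high p_forward, and a narrow
    exit on the right gives a low p_back.
    """
    rng = random.Random(seed)
    in_right = [False] * n_bodies  # every body starts in the left room
    for _ in range(steps):
        for i in range(n_bodies):
            if in_right[i]:
                # A body in the right room rarely finds its way back.
                if rng.random() < p_back:
                    in_right[i] = False
            else:
                # A body in the left room is funnelled through more often.
                if rng.random() < p_forward:
                    in_right[i] = True
    return sum(in_right) / n_bodies


if __name__ == "__main__":
    print("symmetric gate:", simulate_gate(p_forward=0.20, p_back=0.20))
    print("funnel gate:   ", simulate_gate(p_forward=0.30, p_back=0.05))
```

With a symmetric gate the bodies split roughly evenly between the rooms. With the funnel, the long-run share in the right room settles near `p_forward / (p_forward + p_back)`, so changing the walls changes the distribution without touching any single body.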

We could also capture the results of this direction of randomness and use them to reverse-engineer formulas that control things deterministically, rather than relying on the non-deterministic nature of ML outputs.
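As a rough illustration of capturing results and recovering a formula, the sketch below records noisy observations of a hidden process and fits a straight line to them with ordinary least squares. The hidden process (`y = 2x + 1` plus Gaussian noise) and all function names are assumptions made up for this example; a real system would likely need a richer model than a line.

```python
import random


def observe(x, rng):
    """One noisy observation of a hidden process (hypothetical: y = 2x + 1 plus noise)."""
    return 2.0 * x + 1.0 + rng.gauss(0.0, 0.5)


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Capture the results of the random process...
rng = random.Random(0)
xs = [i / 10 for i in range(200)]
ys = [observe(x, rng) for x in xs]

# ...and reverse-engineer a formula from them.
slope, intercept = fit_line(xs, ys)


def predict(x):
    """Deterministic replacement: the same input always gives the same output."""
    return slope * x + intercept
```

Once `slope` and `intercept` are fixed, `predict` no longer samples anything: the randomness was only needed to discover the formula, not to run it.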

Like a query planner in Spark or SQL, the system uses statistical properties of the data to determine what to do next.

The difference here is that the statistics are the unknowns, not the knowns.
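The planner analogy can be sketched as a toy cost-based decision. This is not Spark's actual planner code: `TableStats` and `choose_join` are hypothetical names, and the 10 MB cutoff is chosen to mirror the default of Spark's `spark.sql.autoBroadcastJoinThreshold` setting. The point is only that known statistics about the data, not the data itself, pick the next step.

```python
from dataclasses import dataclass

# Assumed cutoff, mirroring Spark's default broadcast threshold of 10 MB.
BROADCAST_THRESHOLD_BYTES = 10 * 1024 * 1024


@dataclass
class TableStats:
    """Known statistics about a table (hypothetical structure)."""
    name: str
    row_count: int
    avg_row_bytes: int

    @property
    def size_bytes(self) -> int:
        return self.row_count * self.avg_row_bytes


def choose_join(left: TableStats, right: TableStats) -> str:
    """Pick a join strategy from table statistics alone."""
    smaller = min(left, right, key=lambda t: t.size_bytes)
    if smaller.size_bytes <= BROADCAST_THRESHOLD_BYTES:
        # Small enough to copy to every worker, so no shuffle is needed.
        return f"broadcast join (ship {smaller.name} to every worker)"
    return "sort-merge join (shuffle both sides)"


if __name__ == "__main__":
    orders = TableStats("orders", row_count=50_000_000, avg_row_bytes=200)
    countries = TableStats("countries", row_count=250, avg_row_bytes=100)
    print(choose_join(orders, countries))
```

In a database the statistics are inputs to this decision. In the setting described here the situation is inverted: the distribution is what has to be discovered or shaped.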

The shadows around objects can also be used to understand their placement and direct them.

It is not just the properties of the data that affect the latency and outcome of the query; it is also the relationships to other objects in the space and what surrounds them.

After the predictions of AI and the forward and backward movement of data and objects have done their work, the binary thinking of the traditional computer's reality takes over.

Solutions become less random and more directable, simpler, easier to control.

Gravity, velocity, and paths control not only movement but also sound.

Once we get out of the Marble Machine Rube Goldberg way of thinking, we will have no need for FPGAs, GPUs, or Neural Computing. All the work will be done 'in the display' using newer traditional-like computing methods or some other form of simpler binary logic chips. Or perhaps some "program" running in a liquid or a tree.

VIRTUAL REALITY

By Steven M. LaValle. Cambridge University Press, 2023.

http://www.lavalle.pl/vr/

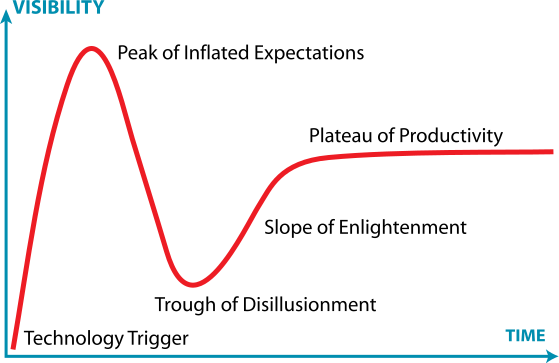

VR was popular in the 80s and 90s but was never more than a gimmick until Google Cardboard, HoloLens (AR/VR) and others came to market.

Then the Oculus Kickstarter was funded for $2.4m.

"What I've got now, is, I honestly think the best VR demo probably the world has ever seen."

John Carmack, id Software

Then Oculus was sold to Facebook in 2014. At this point, the valuations didn't make sense, and the hype cycle was almost at its height.

Ready Player One came out in 2018. Retro computing and 80s nostalgia were at their peak.

And then the VR winter resumed, along with certain other events that affected the world.

https://www.ben-evans.com/benedictevans/2020/5/8/the-vr-winter

We're almost out of the Winter of VR, and the reality is no longer virtual; it is augmented, extra-sensory and overwritten.